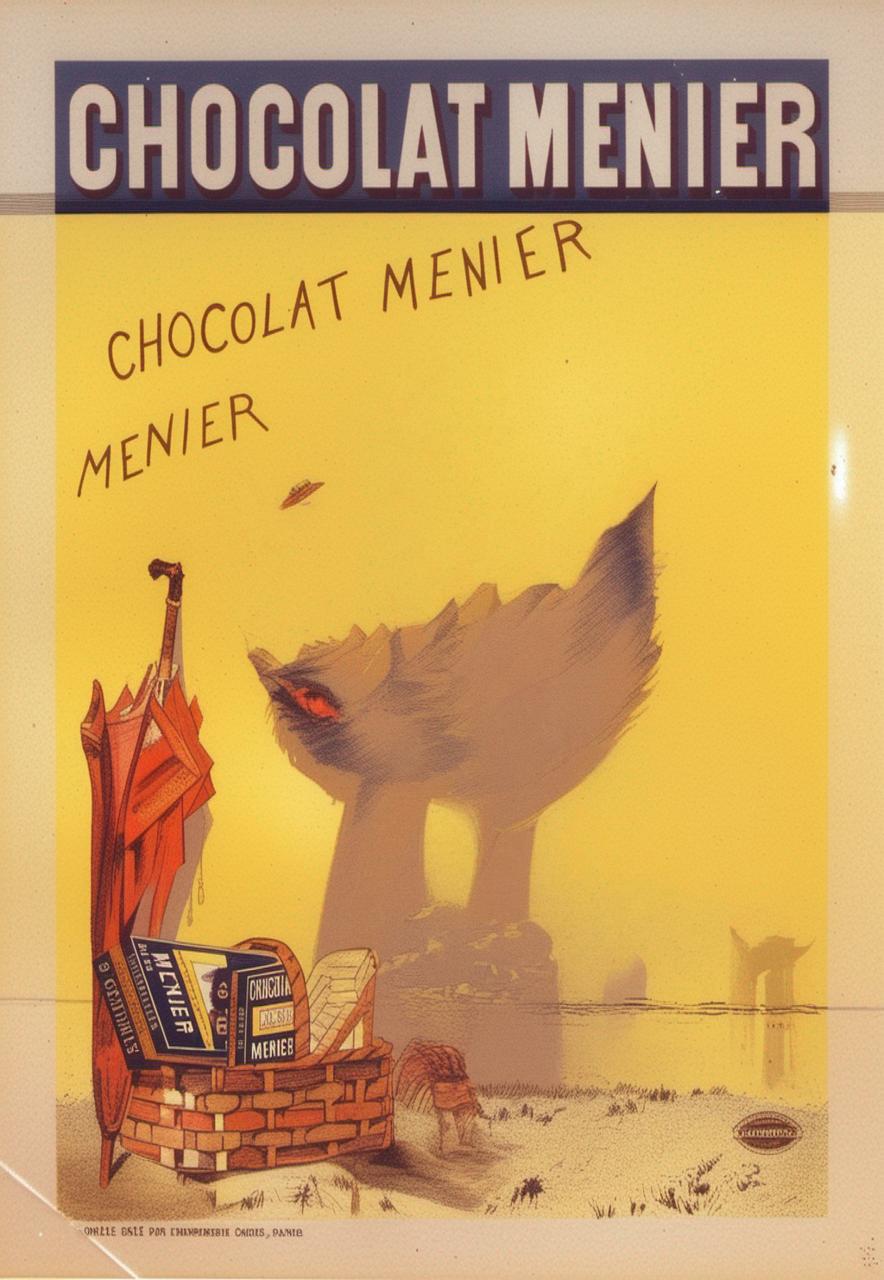

First, we segment the latent representations of the source images into multiple layers: several object layers and one incomplete background layer that requires reliable inpainting. To avoid extra tuning, we exploit the intrinsic inpainting ability of the self-attention mechanism. We introduce a key-masking self-attention scheme that propagates the surrounding context into the masked region while limiting the masked region's influence on the regions outside the mask.
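The key-masking idea can be illustrated with a minimal NumPy sketch. It assumes the masking is applied by setting the attention logits of keys inside the masked region to negative infinity before the softmax. The function name, projection matrices, and shapes are hypothetical and chosen for illustration only; this is not the released implementation.

```python
import numpy as np

def key_masked_self_attention(x, w_q, w_k, w_v, key_mask):
    """Single-head self-attention that ignores keys inside a masked region.

    x:        (n, d) array of latent tokens.
    w_q/k/v:  (d, d) projection matrices (hypothetical, for illustration).
    key_mask: (n,) boolean array, True where a token lies in the region to inpaint.
    """
    if key_mask.all():
        raise ValueError("at least one key must lie outside the mask")

    q, k, v = x @ w_q, x @ w_k, x @ w_v
    logits = q @ k.T / np.sqrt(k.shape[-1])

    # Assumption: masked keys are excluded for every query, so tokens inside
    # the mask are rebuilt from surrounding context, and tokens outside the
    # mask never read the masked content.
    logits = np.where(key_mask[None, :], -np.inf, logits)

    # Numerically stable softmax over the key axis.
    logits = logits - logits.max(axis=-1, keepdims=True)
    weights = np.exp(logits)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights
```

Under this assumption, every query, including those inside the mask, aggregates values only from unmasked tokens, so changing the content of the masked tokens leaves the outputs at unmasked positions unchanged.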

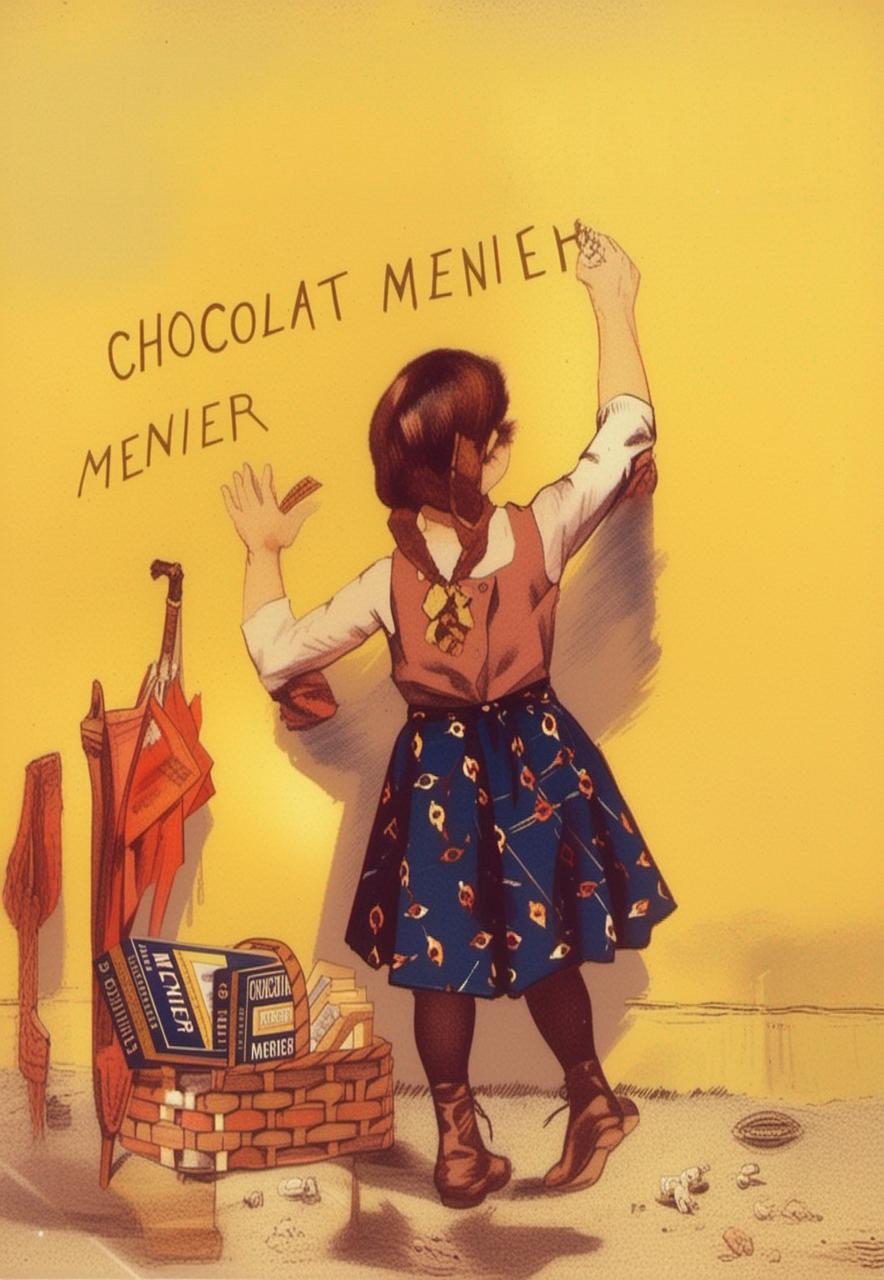

Second, we propose an instruction-guided latent fusion that pastes the multi-layered latent representations onto a canvas latent. We also introduce an artifact suppression scheme in the latent space to enhance the inpainting quality. The inherent modularity of such multi-layered representations enables accurate image editing, and we demonstrate that our approach consistently surpasses the latest spatial editing methods, including Self-Guidance and DiffEditor.
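As a rough illustration of pasting layers onto a canvas latent, the sketch below reduces each editing instruction to a translation offset and composites the layers back to front. The function name, the `(latent, mask, offset)` layer format, and the use of a plain shift are assumptions made for this example; the actual fusion and the artifact suppression step are not reproduced here.

```python
import numpy as np

def fuse_layers(background, layers):
    """Paste object layers onto a canvas latent, back to front.

    background: (c, h, w) inpainted background latent used as the canvas.
    layers:     list of (latent, mask, (dy, dx)) tuples (hypothetical format):
                latent is (c, h, w), mask is (h, w) boolean, and (dy, dx) is
                the translation requested by the editing instruction.
    """
    canvas = background.copy()
    for latent, mask, (dy, dx) in layers:
        # Shift the layer and its mask together. np.roll wraps around at the
        # borders; a fuller implementation would crop or pad instead.
        moved_latent = np.roll(latent, (dy, dx), axis=(1, 2))
        moved_mask = np.roll(mask, (dy, dx), axis=(0, 1))
        # Later layers overwrite earlier ones wherever their mask is set.
        canvas = np.where(moved_mask[None], moved_latent, canvas)
    return canvas
```

Because each layer carries its own mask and offset, moving, removing, or reordering an object only changes the corresponding entry in `layers`, which is one way to read the modularity argument above.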

Lastly, we show that our approach is a unified framework that supports more than six different accurate image editing tasks.

@misc{jia2024designedit,
  title={DesignEdit: Multi-Layered Latent Decomposition and Fusion for Unified & Accurate Image Editing},
  author={Yueru Jia and Yuhui Yuan and Aosong Cheng and Chuke Wang and Ji Li and Huizhu Jia and Shanghang Zhang},
  year={2024},
  eprint={2403.14487},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}